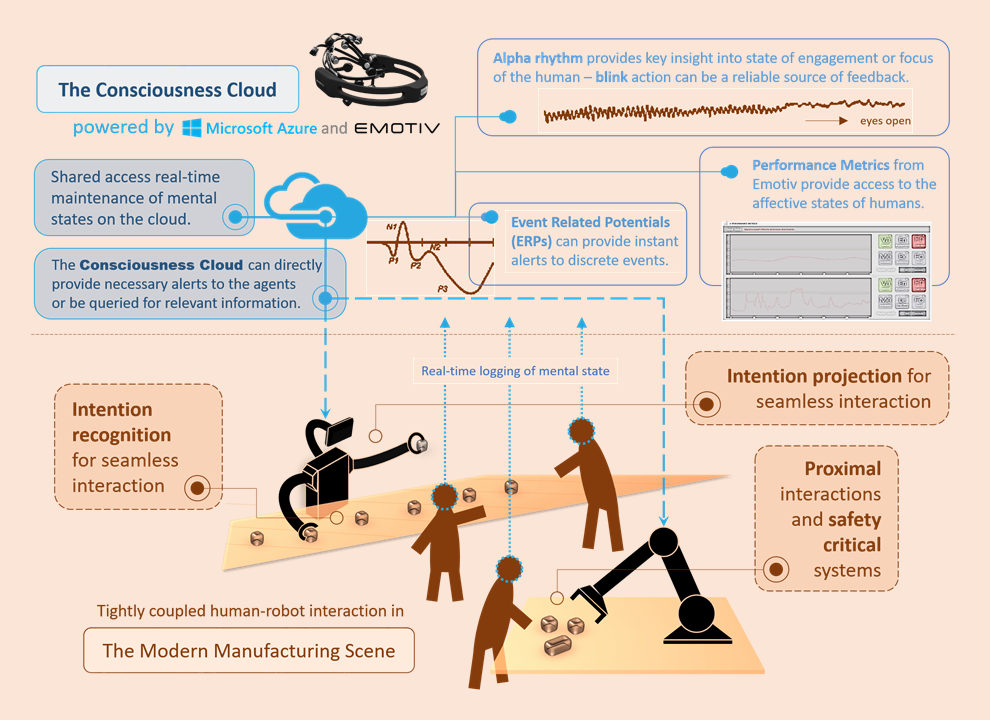

The Consciousness Cloud gives robots real-time, shared access to the mental state of every human in the workspace. We identify three modalities of EEG-based feedback: (1) Event Related Potentials (ERPs), such as the P300, which provide insight into a human's response (e.g. surprise) to discrete events; (2) affective states, such as stress, valence, and anger, which provide longer-term feedback on how the human evaluates interactions with the robot; and (3) the alpha rhythm, which can relate to factors such as the task engagement and focus of the human teammate. The Consciousness Cloud logs the mental state of all humans in the shared workspace and exposes it to the robots as a real-time representation of the humans' mental models. Agents can thus either (1) query the Consciousness Cloud for particulars of the current mental state (e.g. stress levels), or (2) receive alerts about a human's response to events in the environment (e.g. oddball incidents such as safety hazards, which elicit corresponding P300 spikes).
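The two ways an agent can use the Consciousness Cloud, pulling a particular value or being pushed an alert, can be sketched as a tiny in-process store. This is a minimal sketch under stated assumptions: the class `ConsciousnessCloud` and its methods `update_affect`, `query`, `subscribe`, and `publish_event` are hypothetical names, and in the system described here the data actually travels over message queues rather than direct method calls.

```python
from typing import Callable, Dict, List, Tuple

Alert = Callable[[str, dict], None]  # hypothetical callback(human_id, event_details)


class ConsciousnessCloud:
    """Hypothetical in-process stand-in for the shared mental-state store.

    Logs every reading, answers point queries (mode 1), and pushes
    discrete-event alerts such as P300 spikes to subscribers (mode 2).
    """

    def __init__(self) -> None:
        self._latest: Dict[str, Dict[str, float]] = {}  # human_id -> metric -> value
        self._log: List[Tuple[str, str, object]] = []    # append-only history
        self._subscribers: Dict[str, List[Alert]] = {}   # event_type -> callbacks

    def update_affect(self, human_id: str, metric: str, value: float) -> None:
        """Record the newest value of an affective metric (e.g. 'stress')."""
        self._latest.setdefault(human_id, {})[metric] = value
        self._log.append((human_id, metric, value))

    def query(self, human_id: str, metric: str) -> float:
        """Mode (1): pull one particular of a human's current mental state."""
        return self._latest[human_id][metric]

    def subscribe(self, event_type: str, callback: Alert) -> None:
        """Mode (2): ask to be alerted whenever `event_type` is detected."""
        self._subscribers.setdefault(event_type, []).append(callback)

    def publish_event(self, human_id: str, event_type: str, details: dict) -> None:
        """Log a detected event and notify every subscriber for that event type."""
        self._log.append((human_id, event_type, details))
        for callback in self._subscribers.get(event_type, []):
            callback(human_id, details)


if __name__ == "__main__":
    cloud = ConsciousnessCloud()
    cloud.update_affect("alice", "stress", 0.72)
    print(cloud.query("alice", "stress"))  # 0.72

    cloud.subscribe("p300", lambda human, d: print("alert:", human, d))
    cloud.publish_event("alice", "p300", {"cause": "oddball"})  # alert: alice {'cause': 'oddball'}
```

Keeping the latest value per metric separate from the append-only log means a query is a constant-time lookup, while the full history remains available for later analysis.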

System Architecture

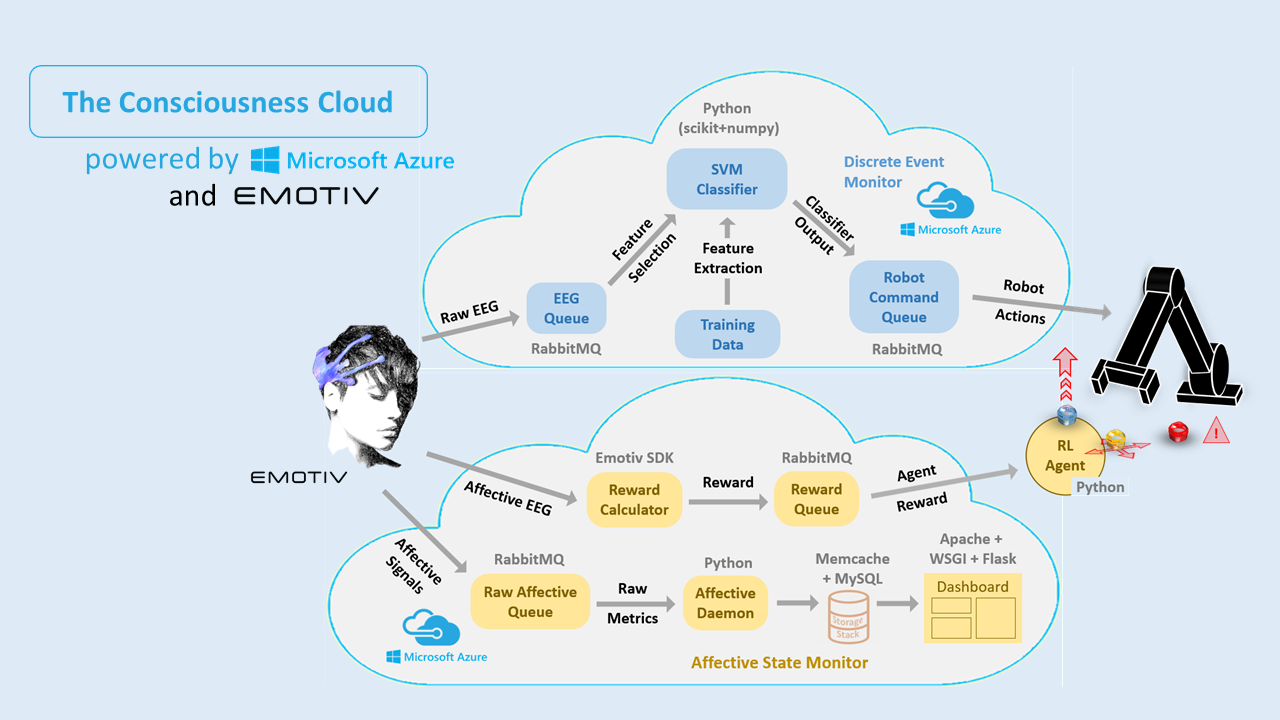

The Consciousness Cloud has two components: the affective state monitor and the discrete event monitor.

In the affective state monitor, metrics corresponding to the affective signals recorded by the Emotiv EPOC+ headset are fed directly into a RabbitMQ queue called “Raw Affective Queue” for visualization, while a reward signal calculated from those metrics is fed into the “Reward Queue”. The robot consumes the “Reward Queue” directly: the AI agent, which implements reinforcement learning, treats the signals that arrive during the execution of an action as that action's reward, i.e. the environment feedback.

In the discrete event monitor, the raw EEG signals are sampled and written to a RabbitMQ queue called “EEG queue”. This queue is consumed by our machine learning (classifier) module, a Python daemon running on an Azure server. When the daemon is spawned, it trains an SVM classifier on a set of previously labelled EEG signals. Signals consumed from the queue first pass through a feature extractor, and the SVM then uses the extracted features to detect specific events (e.g. blinks). For each detected event, a corresponding command is sent to the “Robot Command” queue, which the robot consumes. For example, if a STOP command is sent for the blink event, the robot halts its current operation.
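The text states that the reward is "calculated from the metrics" and that signals arriving during an action count as that action's reward, but it gives neither the formula nor the aggregation rule. The sketch below fills both gaps with labelled assumptions: a weighted sum over hypothetical `REWARD_WEIGHTS` (positive for valence, negative for stress), timestamped messages, and a mean over the action's time window. A standard-library `queue.Queue` stands in for the RabbitMQ “Reward Queue”; `RewardMsg`, `reward_from_metrics`, and `action_reward` are hypothetical names.

```python
import queue
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical weights: the text only says the reward is "calculated from the metrics".
REWARD_WEIGHTS: Dict[str, float] = {"valence": 1.0, "stress": -1.0}


@dataclass
class RewardMsg:
    timestamp: float  # seconds on a clock shared with the robot (assumed)
    value: float


def reward_from_metrics(metrics: Dict[str, float]) -> float:
    """Weighted sum of the affective metrics that have a weight; others are ignored."""
    return sum(REWARD_WEIGHTS[name] * value
               for name, value in metrics.items() if name in REWARD_WEIGHTS)


def action_reward(reward_queue: "queue.Queue[RewardMsg]", start: float, end: float) -> float:
    """Drain the queue and return the mean reward received while the action ran.

    Messages stamped outside [start, end] are dropped; returns 0.0 if none fell inside.
    """
    in_window: List[float] = []
    while True:
        try:
            msg = reward_queue.get_nowait()
        except queue.Empty:
            break
        if start <= msg.timestamp <= end:
            in_window.append(msg.value)
    return sum(in_window) / len(in_window) if in_window else 0.0


if __name__ == "__main__":
    rewards: "queue.Queue[RewardMsg]" = queue.Queue()
    rewards.put(RewardMsg(0.5, reward_from_metrics({"valence": 0.75, "stress": 0.25})))  # 0.5
    rewards.put(RewardMsg(1.5, reward_from_metrics({"valence": 0.5, "stress": 0.25})))   # 0.25
    rewards.put(RewardMsg(3.0, 1.0))  # arrives after the action ended, so it is ignored
    print(action_reward(rewards, start=0.0, end=2.0))  # 0.375
```

Because `action_reward` drains the whole queue, messages stamped after `end` are discarded; a real consumer would more likely buffer them and attribute them to the next action.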
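The discrete event path (feature extraction, SVM classification, and dispatch of a command to the robot) can be sketched end to end without a broker. This is an illustration under stated assumptions, not the daemon's actual code: the features (peak-to-peak amplitude and standard deviation per window), the `AMPLITUDE_SCALE` constant, and the toy windows are hypothetical, and the SVM is a linear SVM trained with the Pegasos subgradient method, swapped in so the example needs no third-party library. A `queue.Queue` stands in for the “Robot Command” queue, and the blink to STOP mapping is the one from the text.

```python
import queue
from typing import List, Optional, Sequence

AMPLITUDE_SCALE = 100.0  # assumed: raw samples in microvolts; rescale so features are O(1)
EVENT_TO_COMMAND = {"blink": "STOP"}  # the blink -> STOP mapping from the text


def extract_features(window: Sequence[float]) -> List[float]:
    """Hypothetical features for one EEG window: peak-to-peak amplitude, std, bias term."""
    n = len(window)
    mean = sum(window) / n
    std = (sum((x - mean) ** 2 for x in window) / n) ** 0.5
    ptp = max(window) - min(window)
    # The constant 1.0 folds the SVM bias into the weight vector (so it is regularized too).
    return [ptp / AMPLITUDE_SCALE, std / AMPLITUDE_SCALE, 1.0]


class LinearSVM:
    """Linear SVM (hinge loss + L2 penalty) trained with the Pegasos subgradient method."""

    def __init__(self, lam: float = 0.01, epochs: int = 100) -> None:
        self.lam = lam
        self.epochs = epochs
        self.w: List[float] = []

    def _score(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def fit(self, X: List[List[float]], y: List[int]) -> "LinearSVM":
        """X: feature vectors; y: +1 for the event (blink), -1 otherwise."""
        self.w = [0.0] * len(X[0])
        t = 0
        for _ in range(self.epochs):
            for xi, yi in zip(X, y):
                t += 1
                eta = 1.0 / (self.lam * t)  # Pegasos step size
                violated = yi * self._score(xi) < 1.0  # margin checked before the update
                self.w = [(1.0 - eta * self.lam) * wi for wi in self.w]  # L2 shrink
                if violated:  # hinge-loss subgradient step
                    self.w = [wi + eta * yi * x for wi, x in zip(self.w, xi)]
        return self

    def predict(self, x: Sequence[float]) -> int:
        return 1 if self._score(x) >= 0.0 else -1


def handle_window(model: LinearSVM, window: Sequence[float],
                  command_queue: "queue.Queue[str]") -> Optional[str]:
    """Classify one window; on a detected blink, send the mapped command to the robot."""
    if model.predict(extract_features(window)) == 1:
        command = EVENT_TO_COMMAND["blink"]
        command_queue.put(command)
        return command
    return None


if __name__ == "__main__":
    # Toy labelled windows (made-up values, not real EEG): large deflections stand for blinks.
    rest = [[2.0, -3.0, 4.0, -1.0, 0.0, 3.0], [-2.0, 1.0, 3.0, -4.0, 2.0, 0.0]]
    blinks = [[5.0, 80.0, 150.0, 120.0, 40.0, 0.0], [0.0, 60.0, 140.0, 110.0, 30.0, 5.0]]
    X = [extract_features(w) for w in rest + blinks]
    y = [-1] * len(rest) + [1] * len(blinks)
    model = LinearSVM().fit(X, y)

    commands: "queue.Queue[str]" = queue.Queue()
    print(handle_window(model, [1.0, 130.0, 160.0, 90.0, 20.0, 2.0], commands))  # STOP
    print(handle_window(model, [1.0, -2.0, 2.0, 0.0, -1.0, 1.0], commands))      # None
```

Scaling the features matters here: left in raw microvolts, the amplitude features would dwarf the constant bias term and make training poorly conditioned. A production daemon would more typically standardize features with statistics from the labelled training set and use an established SVM implementation.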